INTRODUCTION

The result of an evaluation depends heavily on the attitude of the evaluator(s). After all, an evaluation often assesses documents created by someone else, and it usually results in a list of findings intended for the author of the document in question. Depending on how the findings are recorded or communicated, the author may feel ‘attacked’. Chances are that this will result in a negative perception of the evaluation process. It is therefore important to realise that the author did not write things down ‘incorrectly’ on purpose. It is also important to be aware that the final goal of the evaluation process is to deliver the best possible end product together. Evaluation techniques are very well suited to improving the quality of products. This applies not only to the evaluated products themselves, but also to other products. For instance, the findings from the evaluation process may lead to improvements in the development process.

Research has shown, however, that evaluation processes, despite their proven value, are not always implemented or executed smoothly. A survey brought the following causes to light:

- 56% of the authors found it hard to disengage from their work
- 48% of the evaluators had not received the correct training
- 47% of the authors were afraid that the data would be used against them.

After a general description of evaluation, this chapter discusses three techniques in greater detail: inspections, reviews and walkthroughs. The last section of this chapter contains an evaluation technique selection matrix that can be used to choose a technique.

“IEEE Std 1028-1997 Standard for Software Reviews” [IEEE, 1998] was used as a basis for this chapter, supplemented with experiences from practice.

Tips - Evaluation is one of the key areas in the “test process improvement” (TPI) model [Koomen, 1999]. The TPI book contains some suggestions for implementing and improving the evaluation process (section 7.19 “Evaluation”).

EVALUATION EXPLAINED

Intermediary products

Various intermediary products are developed in the course of the system development process. Depending on the selected method, these have a certain form, content and inter-relationship, on the basis of which they can be evaluated.

The evaluation of intermediary products does not have to be limited to the development documents. Evaluation can occur at all levels of documentation:

Evaluation site

Beside the quality improvement described earlier, another important aspect of evaluation is that defects can be found much

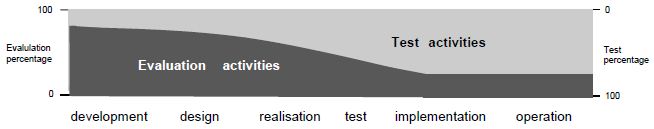

earlier in the (development) process than by testing (see figure 1). This is because evaluation assesses intermediary

products and not, as testing does, the end products. And the earlier a defect is found, the more easily and economically it

can be repaired [Boehm, 1981].

Figure 1: Evaluation and testing versus system development phasing

Evaluation has proven time and again to be the most efficient and effective way to eliminate defects from the intermediary products of a system (see the practical examples in Prepare (AST)). Evaluation is also a process that is easy to set up because, for instance, no software has to be run and no environment has to be created. There are therefore ample reasons to set up an evaluation process.

In practice, however, good intentions can become mired in practical execution problems: “Can you have a look at these 6 folders with the functional specifications? Please get them back to us the day after tomorrow, because that is when we start programming.”

Formal evaluation techniques, in the form of process descriptions and checklists, help get this under control. A formal evaluation technique is characterised by the fact that several persons evaluate as a team, defects are documented (see Tips - Evaluation Techniques), and there are written procedures for executing the evaluation activities.

Tips - Evaluators often detect many defects, and it is frequently decided to enter all of them in a defect administration. At the end of the evaluation meeting, fields such as status, severity and action then have to be updated for each defect. These are labour- and time-intensive activities that can be minimised as follows:

- Evaluators record their comments on an evaluation form.
- These comments are discussed during the evaluation meeting.
- At the end of the meeting, only the most important comments are registered in the defect administration as defects. Please refer to Defects Management for more information on the defect procedure.

An evaluation form contains the following aspects:

Per form:

- Identification of the evaluator
- Identification of the intermediary product
  - document name
  - version number/date
- Evaluation process data
  - number of pages evaluated
  - evaluation time invested
- General impression of the intermediary product

Per comment:

- Unique reference number
- Clear reference to the place in the intermediary product to which the comment relates (e.g. by specifying the chapter, section, page, line or requirement number)
- Description of the comment
- Importance of the comment (e.g. high, medium, low)
- Follow-up actions (to be filled out by the author with e.g. completed, partially completed, not completed)

Note: This form is particularly useful for reviewing documents. When reviewing software, or for a walkthrough of a prototype, the aspects on the form need to be modified.
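The evaluation form above, together with the tip about registering only the most important comments, can be sketched as a small data model. This is a minimal Python illustration, not a prescribed tool. The class and function names are hypothetical, and the rule that only `HIGH` comments go into the defect administration is an assumed threshold that a team would set for itself.

```python
from dataclasses import dataclass, field
from enum import Enum


class Importance(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class Comment:
    reference: int          # unique reference number
    location: str           # chapter, section, page, line or requirement number
    description: str
    importance: Importance
    follow_up: str = ""     # filled out by the author: completed / partially completed / not completed


@dataclass
class EvaluationForm:
    evaluator: str          # identification of the evaluator
    document_name: str      # identification of the intermediary product...
    version: str            # ...version number/date
    pages_evaluated: int    # evaluation process data
    hours_invested: float
    general_impression: str
    comments: list[Comment] = field(default_factory=list)


def select_for_defect_administration(forms: list[EvaluationForm],
                                     threshold: Importance = Importance.HIGH) -> list[Comment]:
    """Return the comments, across all forms, whose importance reaches the (assumed) threshold."""
    return [comment
            for form in forms
            for comment in form.comments
            if comment.importance.value >= threshold.value]
```

Lowering `threshold` to `Importance.MEDIUM` registers more comments as defects. The cost is more of the administrative work that the tip is meant to avoid.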

Evaluation techniques vary in intensity. This matters because not every intermediary product needs to be evaluated equally intensively, which is why an evaluation strategy is often included in the master test plan. Like a test strategy, an evaluation strategy is extremely important for deploying the effort optimally, and it is also a means of communication towards the client. When establishing the strategy, an analysis is made of what has to be evaluated, where and how often, in order to achieve the optimal balance between the required quality and the time and/or money required. Optimisation aims to distribute the available resources correctly over the activities to be executed.

Structure of evaluation technique guideline

After a few general tips, the sections below describe the techniques according to the following structure:

- Introduction - Description of the goal of the technique and the products to which the technique can be applied.
- Responsibilities - Description of which roles are allocated to participants.
- Entry criteria - Description of the necessary products and conditions that must be met before evaluation can begin.
- Procedures - Description of:
  - How to organise an evaluation (planning)
  - The method to be used
  - The required preparation of the participants
  - How evaluation results are discussed and recorded
  - How rework is done and checked.
- Exit criteria - Description of the deliverable documents and the conditions under which the product can exit the evaluation process.

Tips - When using an evaluation technique, practice has shown that there are several critical

success factors:

-

The author must be released from other activities to participate in the evaluation process and process the results.

-

Authors must not be held accountable for the evaluation results.

-

Evaluators must have attended a (short) training in the specific evaluation technique.

-

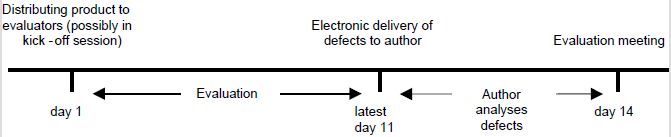

Adequate (preparation) time must be available between submission of the products to the evaluators and the

evaluation meeting (e.g. two weeks). If necessary, the products can be made available during a kick-off. See

figure 2 for a possible planning of the evaluation programme.

-

The minutes secretary must be experienced and adequately instructed. Making minutes of all defects, actions,

decisions and recommendations of the evaluators is vital to the success of an inspection process. Sometimes the

author takes on the role of minutes secretary, but the disadvantage is that the author may miss parts of the

discussion (because he must divide his attention between writing and listening).

-

The size of the intermediary product to be evaluated and the available preparation and meeting time must be tuned

to each other.

-

Make clear follow-up agreements. Agree when and in which version of the intermediary product the agreed changes

must be implemented.

-

Feedback from the author to the evaluators (appreciation for their contribution).

Figure 2: Possible planning of an evaluation programme

The planning above does not take into account any activities to be executed after the evaluation meeting. These might involve modifying the product, re-evaluation and final acceptance of the product. If relevant, such activities must be added to the planning. The activities ‘modifying the product’ and ‘re-evaluation’ may be iterative.

Practical example - In an organisation in which the time-to-market of various modifications to the information system had to be short, the modifications were implemented by means of a large number of short-term increments.

The testers were expected to review the designs (in the form of use cases). A lead time of two weeks for a review programme was not a realistic option. To solve the problem, Monday was chosen as the fixed review day. The ‘rules of the game’ were as follows:

- The designers delivered one or more documents for review to the test manager before 9.00am (if no documents were delivered, no review took place that Monday).
- All documents taken together were not allowed to exceed a total of 30 A4-sized pages.
- The test manager determined which tester had to review what.
- The testers had to return their review comments to the relevant designer before 12.00 noon.
- The review meeting was held from 2.00pm to 3.00pm.
- The aim was for the designer to modify the document the same day (depending on the severity of the comments, one day later was allowed).
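The intake rules of this practical example are concrete enough to express as a check. The Python sketch below is hypothetical and covers only the 9.00am delivery deadline and the 30-page limit. It assumes that documents delivered after 9.00am are left for a later Monday and that an over-limit batch is rejected back to the designers. The example does not say how either case was actually handled.

```python
from dataclasses import dataclass
from datetime import date, datetime, time
from typing import Optional

DELIVERY_DEADLINE = time(9, 0)  # documents must reach the test manager before 9.00am
MAX_TOTAL_PAGES = 30            # all documents together: at most 30 A4 pages


@dataclass
class Submission:
    document: str
    pages: int
    delivered_at: datetime


def documents_for_review_day(submissions: list[Submission],
                             review_day: date) -> Optional[list[Submission]]:
    """Apply the Monday 'rules of the game'; None means no review takes place."""
    if review_day.weekday() != 0:
        raise ValueError("the fixed review day is Monday")
    on_time = [s for s in submissions
               if s.delivered_at.date() == review_day
               and s.delivered_at.time() < DELIVERY_DEADLINE]
    if not on_time:
        return None  # nothing delivered in time, so no review this Monday
    total = sum(s.pages for s in on_time)
    if total > MAX_TOTAL_PAGES:
        raise ValueError(f"{total} pages exceed the {MAX_TOTAL_PAGES}-page limit")
    return on_time
```

The rest of the day (comments before noon, meeting from 2.00pm to 3.00pm) is fixed, so only the intake needs a rule.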

INSPECTIONS

There are various forms of the inspection process. This section describes a general form. For a specific, and indeed the most common, form, please refer to [Gilb, 1993] and [Fagan, 1986].

Introduction

A formal work method is followed when executing an inspection, with products being read thoroughly by a group of experts. The product can be any of the documents listed in section Evaluation explained, and it must be 100% complete. The inspectors look for deviations from predefined criteria in these products. In addition to determining whether the solution is adequately processed, an inspection focuses primarily on achieving consensus on the quality of a product. Aspects for evaluation include, for example, compliance of the product with certain standards, specifications, regulations, guidelines, plans and/or procedures. The inspection criteria are often collected and recorded in a checklist. The aim of the inspection is to help the author find as many deviations as possible in the available time.

Responsibilities

Three to six participants are involved in an inspection. Possible roles are:

- Moderator - The moderator prepares and leads the inspection process. This means planning the process, making agreements, determining product and group size, and bearing responsibility for recording all defects detected during the inspection process.
- Author - The author requests an inspection. During the inspection process, he explains anything that may be unclear in the product. The author must also ensure that he understands the defects found by the inspectors.
- Minutes secretary - The minutes secretary records all defects, actions, decisions and recommendations of the inspectors during the inspection process.
- Inspector - Prior to the inspection meeting, the inspector tries to find and document as many defects as possible. The moderator often asks an inspector to do so from one specific perspective, e.g. project management, testing, development or quality (see text box “perspective-based reading”). An inspector can also be asked to check the entire product for correct use of a certain standard.

With the exception of the author, each participant may fulfil one or more of the above roles. All participants except the author at least fulfil the role of inspector. None of the participants may be the superior of another participant, because there is a risk that participants will then hold back when reporting their findings.
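A moderator can check the inspection staffing rules mechanically when composing a team. The rules are: three to six participants, an author who takes on no other role, everyone else at least an inspector, and no superiors in the team. This is a minimal Python sketch with a hypothetical data model. Requiring at least an author and a moderator is an assumption drawn from the role descriptions, not an explicit rule in the text.

```python
from dataclasses import dataclass, field

ROLES = {"moderator", "author", "minutes secretary", "inspector"}


@dataclass
class Participant:
    name: str
    roles: set[str]
    superiors: set[str] = field(default_factory=set)  # names of this person's managers


def inspection_team_problems(team: list[Participant]) -> list[str]:
    """List violations of the staffing rules described for an inspection."""
    problems = []
    if not 3 <= len(team) <= 6:
        problems.append(f"team size {len(team)} is outside the range 3-6")
    names = {p.name for p in team}
    for p in team:
        unknown = p.roles - ROLES
        if unknown:
            problems.append(f"{p.name}: unknown role(s) {sorted(unknown)}")
        if "author" in p.roles:
            if p.roles != {"author"}:
                problems.append(f"{p.name}: the author fulfils no other role")
        elif "inspector" not in p.roles:
            problems.append(f"{p.name}: every participant except the author is an inspector")
        bosses = p.superiors & names
        if bosses:
            problems.append(f"{p.name}: superior(s) {sorted(bosses)} in the same team")
    for role in ("author", "moderator"):  # assumed to be mandatory
        if not any(role in p.roles for p in team):
            problems.append(f"no {role} in the team")
    return problems
```

An empty result means the team satisfies the rules as modelled here. It says nothing about whether the team has the right expertise.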

Perspective-based reading

Participants in an evaluation activity often assess a product with the same objective and from the same perspective, and a systematic approach during preparation is often missing. The risk is that the various participants find the same type of defects. A good reading technique can bring improvement. One commonly used technique is perspective-based reading (PBR). Properties of PBR are:

- Participants evaluate a product from one specific perspective (e.g. as developer, tester, user or project manager).
- The approach is based on ‘what’ and ‘how’ questions: a procedure lays down which parts of the product must be evaluated from a specific perspective, and how they must be evaluated. Often a procedure (scenario) is created for each perspective.

PBR is one of the ‘scenario-based reading’ (SBR) techniques. Defect reading, scope reading, use-based reading and horizontal/vertical reading are other SBR techniques that participants in an evaluation activity can use. Please refer to e.g. [Laitenberger, 1995] and [Basili, 1997] for more information.
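One simple way for a moderator to apply PBR is to give each inspector a different perspective, so that the team does not all look for the same type of defect. The Python sketch below assumes the four perspectives mentioned above. The guiding questions are hypothetical placeholders, not the published PBR scenarios from the cited literature.

```python
from itertools import cycle

# Perspectives from the text; the guiding questions are illustrative only.
SCENARIOS = {
    "developer": "Can every part of the product be built as described?",
    "tester": "Can test cases with expected results be derived from each requirement?",
    "user": "Does the described behaviour support the user's tasks?",
    "project manager": "Are scope, dependencies and planning consequences clear?",
}


def assign_perspectives(inspectors: list[str]) -> dict[str, str]:
    """Give each inspector one perspective, cycling through them if there are more inspectors."""
    return dict(zip(inspectors, cycle(SCENARIOS)))
```

In practice the moderator would match perspectives to the inspectors' backgrounds. The round-robin order only keeps the example short.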

Entry criteria

The inspection process can be started when:

- The aim of the inspection and the inspection procedure are clear.
- The product is 100% complete (but not yet definitive): spelling errors have been eliminated, it complies with the agreed standards, accompanying documentation is available, references are correct, etc.
- The checklists and inspection forms for recording defects during the inspection are available.
- (Possibly) a list with known defects is available.
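Before planning a meeting, the moderator effectively runs through these entry criteria as a checklist. Below is a hypothetical Python sketch. The field names are illustrative, and the optional list of known defects is deliberately not treated as a blocking criterion.

```python
from dataclasses import dataclass


@dataclass
class InspectionCandidate:
    aim_and_procedure_clear: bool
    percent_complete: int
    spelling_errors_eliminated: bool
    complies_with_standards: bool
    accompanying_docs_available: bool
    references_correct: bool
    checklists_and_forms_available: bool


def unmet_entry_criteria(c: InspectionCandidate) -> list[str]:
    """Return the entry criteria not yet met; an empty list means the inspection can start."""
    criteria = {
        "aim of the inspection and procedure are clear": c.aim_and_procedure_clear,
        "product is 100% complete": c.percent_complete == 100,
        "spelling errors have been eliminated": c.spelling_errors_eliminated,
        "product complies with the agreed standards": c.complies_with_standards,
        "accompanying documentation is available": c.accompanying_docs_available,
        "references are correct": c.references_correct,
        "checklists and inspection forms are available": c.checklists_and_forms_available,
    }
    return [name for name, met in criteria.items() if not met]
```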

Procedures

The inspection process can be split up into the phases planning, kick-off, preparation and execution:

Planning

When the product for inspection complies with the entry criteria, the moderator organises an inspection meeting. This means, among other things, determining the date and location of the meeting, creating a team, allocating roles, distributing the products for inspection (delivered by the author) to the participants, and reaching agreements on the period within which the author must receive the defects found.

Kick-off

The kick-off meeting is held before the actual inspection. The meeting is optional and is organised by the moderator for the following reasons:

- If invited participants have not taken part in an inspection before, the moderator gives a brief introduction to the technique and the method used.
- The author of the product for inspection describes the product.
- Any improvements or changes in the work method to be used during the inspection are explained.

If a kick-off is held, the documents are handed out and the roles can be explained during this meeting.

Preparation

Good preparation is necessary to make the inspection meeting as efficient and effective as possible. During the preparation, the inspectors look for defects in the products for inspection and record them on the inspection form. The moderator collects the forms, classifies the defects, and makes the result available to the author in good time so that the author can prepare.
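The moderator's step of collecting and classifying the inspection forms amounts to merging the findings and grouping them. In this hypothetical Python sketch, the severity classes ("major", "minor", "cosmetic") are assumptions. The text does not prescribe a classification scheme.

```python
from collections import defaultdict
from typing import NamedTuple


class Finding(NamedTuple):
    inspector: str
    page: int
    description: str
    severity: str  # hypothetical classes, e.g. "major", "minor", "cosmetic"


def classify_forms(forms: list[list[Finding]]) -> dict[str, list[Finding]]:
    """Merge all inspection forms and group the findings by severity, each group in page order."""
    by_severity = defaultdict(list)
    for form in forms:
        for finding in form:
            by_severity[finding.severity].append(finding)
    return {severity: sorted(findings, key=lambda f: f.page)
            for severity, findings in by_severity.items()}
```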

Execution

The aim of the inspection meeting is not just to record the defects detected by the participants in the preparation phase. Detecting new defects during the meeting and the implicit exchange of knowledge are other important objectives. During the meeting, the moderator ensures that the defects are inventoried page by page in an efficient manner. The minutes secretary records the defects on a defect list. Cosmetic defects are generally not registered, but handed over to the author at the end of the meeting.

At the end of the meeting, the moderator goes through the defect list prepared by the minutes secretary with all participants to ensure that it is complete and that the defects are recorded correctly. Inaccuracies are corrected immediately, but for efficiency reasons no discussion is opened on defects and (possible) solutions.

Finally, it is determined whether the product is accepted as is (possibly with some small changes), is accepted after changes and a check by one of the inspectors, or must be submitted for re-inspection after changes.

Based on the defect list and the agreements made during the meeting, the author adapts the product.
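The meeting's bookkeeping can be sketched in Python as follows. The defect list is kept in page order, cosmetic defects are set aside for the author, and the three possible outcomes are recorded. The names are hypothetical, and the `Outcome` enum only records the participants' decision. It does not decide anything.

```python
from enum import Enum
from typing import NamedTuple


class Finding(NamedTuple):
    page: int
    description: str
    cosmetic: bool


class Outcome(Enum):
    ACCEPTED = "accepted as is (possibly with some small changes)"
    ACCEPTED_AFTER_CHECK = "accepted after changes and a check by one of the inspectors"
    REINSPECTION = "re-inspection after changes"


def meeting_lists(findings: list[Finding]) -> tuple[list[Finding], list[Finding]]:
    """Return (defect list in page order, cosmetic defects handed to the author afterwards)."""
    defect_list = sorted((f for f in findings if not f.cosmetic), key=lambda f: f.page)
    cosmetic = [f for f in findings if f.cosmetic]
    return defect_list, cosmetic
```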

Exit criteria

The inspection process is considered complete when:

- The changes (rework) are complete (checked by the moderator).
- The product has been given a new version number.
- All changes made are documented in the new version of the inspected product (change history).
- Any change proposals with respect to other products that have emerged from the inspection process have been submitted.
- The inspection form has been completed and handed over to the quality management employee responsible, among other things for statistical purposes.

REVIEWS

There are various review types, such as technical review (e.g. selecting a solution direction/alternative), management review (e.g. determining project status), peer review (review by colleagues) and expert review (review by experts). This section describes the review process in general.

Introduction

A review follows a formal method, in which a product (60-80% complete) is submitted to a number of reviewers with the request to assess it from a certain perspective (depending on the review type). The author collects the comments and adjusts the product on this basis.

A review focuses primarily on finding possible solution directions, drawing on the knowledge and competencies of the reviewers, and on finding and correcting defects. A review of a product often takes place earlier in the product’s lifecycle than an inspection.

Responsibilities

A review involves at least three participants. There may be fewer for a peer review, e.g. a code review, which is usually done by one reviewer. Possible roles are:

- Moderator - The moderator prepares and leads the review process. This means planning the review process, inviting the reviewers, and possibly allocating specific tasks to the reviewers.
- Author - The author requests a review and delivers the product for review.
- Minutes secretary - The minutes secretary records all defects, actions, decisions and recommendations of the reviewers during the review process.
- Reviewer - Prior to the review meeting, the reviewer tries to find and document as many defects as possible.

All participants may fulfil one or more of the above roles. All participants except the author at least fulfil the role of reviewer.

Entry criteria

The review process can be started when:

- The aim of the review and the review procedure are clear.
- The product for review is available. A comprehensive “entry check” is not necessary because the product is only 60-80% complete.
- The checklists and review forms for recording defects during the review are available.
- (Possibly) a list with known defects is available.

Procedures

The review process can be split up into the phases planning, preparation and execution:

Planning

When the product for review complies with the entry criteria, the moderator organises a review meeting. This means, among other things, determining the date and location of the meeting, inviting reviewers, distributing the products for review to the participants, and reaching agreements on the period within which the author must receive the defects found.

Preparation

Good preparation is necessary to make the review meeting as efficient and effective as possible. During the preparation, the reviewers look for defects in the products for review and record them on the review form. The moderator collects the forms, preferably classifies the defects, and makes the result available to the author in good time so that the author can prepare.

Execution

At the beginning of the meeting, the agenda is drawn up or adjusted under the leadership of the moderator. The most important defects are placed at the top of the agenda, so that adequate time is reserved for discussing them. Since the product is not yet complete, little or no attention is devoted to less important defects (unlike in an inspection). The minutes secretary records the defects on a defect list. Often an action list is also compiled.

Finally, the moderator may recommend an additional review, depending among other things on the severity and number of defects. Based on the defect/action list made during the meeting, the author adapts the product.
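Placing the most important defects at the top of the agenda and reserving time for them amounts to a simple priority ordering. Below is a hypothetical Python sketch. The per-defect time estimates are an assumption, since the text does not mention them. The loop stops at the first defect that no longer fits, so a less important defect never takes the place of a more important one.

```python
from dataclasses import dataclass

IMPORTANCE_RANK = {"high": 0, "medium": 1, "low": 2}


@dataclass
class ReviewDefect:
    ref: int
    importance: str       # "high", "medium" or "low"
    minutes_needed: int   # hypothetical estimate of discussion time


def build_agenda(defects: list[ReviewDefect], meeting_minutes: int) -> list[ReviewDefect]:
    """Order defects by importance (stable within a class) and fill the available meeting time."""
    agenda, used = [], 0
    for d in sorted(defects, key=lambda d: IMPORTANCE_RANK[d.importance]):
        if used + d.minutes_needed > meeting_minutes:
            break  # everything after this is less (or equally) important
        agenda.append(d)
        used += d.minutes_needed
    return agenda
```

Defects that do not make the agenda are not lost. They stay on the defect list that the author works through afterwards.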

Exit criteria

The review process is considered complete when:

- All actions on the action list are “closed” and all changes based on important defects are incorporated into the product (check by moderator).
- The product is approved for use in a subsequent phase/activity.

WALKTHROUGHS

A walkthrough is a method by which the author explains the contents of a product during a meeting. Several different objectives are possible:

- Bringing all participants to the same starting point, e.g. in preparation for a review or inspection process.
- Transfer of information, e.g. to developers and testers to help them in their programming and test design work, respectively.
- Asking the participants for additional information.
- Letting the participants choose from the alternatives proposed by the author.

Introduction

A walkthrough can be held for any of the documents listed in section Evaluation explained when they are 50-100% complete.

Responsibilities

The number of participants in a walkthrough is unlimited if the author wishes to explain his product to certain groups, e.g. by means of a presentation. For an interactive walkthrough, we recommend a group size of two to seven persons. Possible roles are:

- Moderator - The moderator prepares the walkthrough. This means planning the walkthrough, inviting the participants, and distributing both the product and a document explaining the purpose of the walkthrough.
- Author - The author requests a walkthrough and explains the product during the walkthrough.
- Minutes secretary - The minutes secretary records all decisions and identified actions during the walkthrough. He also records findings (such as conflicts, questions and omissions) and recommendations from the participants.
- Participant - The participant’s role depends on the purpose of the walkthrough. It can vary from listening to actively proposing certain solutions.

All participants may fulfil one or more of these roles. The author can act as the moderator. Both the moderator and the author may fulfil the role of minutes secretary.

Entry criteria

The walkthrough can be started when:

- The purpose of the walkthrough is clear.
- The subject (product) of the walkthrough is available.

Procedures

The walkthrough process can be split up into the phases planning, preparation and execution:

Planning

When the entry criteria are met, the moderator plans the walkthrough, invites participants, distributes the product and explains the purpose of the walkthrough to the participants.

Preparation

Depending on the purpose of the walkthrough, the participants may submit defects, but usually this does not happen (for example because the purpose is knowledge transfer). If defects are submitted, the moderator collects them and makes them available to the author. The author determines how the product is presented, e.g. based on any defects submitted, sequentially, bottom-up or top-down.

Execution

At the beginning of the meeting, the moderator explains the purpose of the walkthrough and the procedure to be followed. The author then provides a detailed description of the product. The participants can ask questions, submit comments and criticism, etc. during or after the description.

Decisions, identified actions, any defects, etc. are recorded by the minutes secretary. At the end of the walkthrough, the moderator goes through the recorded decisions, actions and other important information with all those present for verification purposes.

Exit criteria

The walkthrough is considered complete when:

- The product has been described during the walkthrough.
- Decisions, actions and recommendations have been recorded.
- The purpose of the walkthrough has been achieved.

EVALUATION TECHNIQUE SELECTION MATRIX

As with testing, every organisation or project organises evaluation processes in its own way. This means that there is no single uniform description of the evaluation techniques, nor can we specify in which situation a specific technique is most suitable. However, the table below (also to be found at www.tmap.net) may offer some assistance in selecting a technique:

| Aspect | Inspection | Review | Walkthrough |
|---|---|---|---|
| Area of application | In addition to determining whether the solution is adequately processed, focuses primarily on achieving consensus on the quality of a product. | Focuses primarily on finding possible solution directions and on finding and correcting defects. Review types include technical, management, peer and expert review. | Focuses on choosing from alternative solutions, completing missing information, or knowledge transfer. |
| Products to be evaluated | For all three techniques, for example: functional/technical design, requirements document, management plan, development plan, test plan, maintenance documentation, user/installation manual, software, release note, test design, test script, prototype and screen print. | (as for inspection) | (as for inspection) |
| Group size | Three to six participants. | At least three participants. | Two to seven participants for an interactive walkthrough; unlimited for a presentation. |
| Preparation | Strict management of the aspects to be evaluated by the inspectors. Defects (based on checklists, standards, etc.) described by the inspectors are delivered to the author before the meeting. | Reviewers largely determine for themselves which aspects they want to evaluate. The reviewers' defects are delivered to the author before the meeting. | Ranges from being informed of the product to delivering defects. |
| Product status and size | Product is 100% complete, not yet definitive and limited in size (10-20 pages). | Product is 60-80% complete and has a variable size. | Product is 50-100% complete and has a variable size. |
| Benefits | High quality; incidental and structural quality improvement. | Limited labour intensity; early involvement of reviewers. | High learning impact; low labour intensity. |
| Disadvantages | Labour-intensive (costly); relatively long lead time. | Subjective; possible disturbance of collegial relationships. | Risk of ad hoc discussions because participants are often not prepared. |

Table 1: Evaluation technique selection matrix
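Two rows of the matrix, product status and group size, are quantitative and can serve as a first filter. Below is a hypothetical Python sketch. It splits the walkthrough into an interactive and a presentation variant, following the group-size row. The minimum of two participants for a presentation is an assumption, and the page limit for inspections is not modelled. The remaining rows still call for judgement.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class TechniqueProfile:
    name: str
    min_complete: int          # product completeness range in %, from the matrix
    max_complete: int
    min_group: int
    max_group: Optional[int]   # None means no upper limit


MATRIX = [
    TechniqueProfile("inspection", 100, 100, 3, 6),
    TechniqueProfile("review", 60, 80, 3, None),
    TechniqueProfile("walkthrough (interactive)", 50, 100, 2, 7),
    TechniqueProfile("walkthrough (presentation)", 50, 100, 2, None),  # minimum of 2 is assumed
]


def candidate_techniques(percent_complete: int, group_size: int) -> list[str]:
    """Return the techniques whose product-status and group-size rows fit the situation."""
    return [t.name for t in MATRIX
            if t.min_complete <= percent_complete <= t.max_complete
            and group_size >= t.min_group
            and (t.max_group is None or group_size <= t.max_group)]
```

A product that is 100% complete and evaluated by four people, for example, passes the filter for an inspection and for both walkthrough variants. The choice between them then rests on the benefits and disadvantages rows.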